The use of non-human content – text, music, images – to train models like ChatGPT, Stable Diffusion, and Midjourney results in irreversible defects in their product. This is the conclusion of a group of British and Canadian scientists who experimented with training models on content that other models had previously produced. For example, on texts produced by ChatGPT or images generated by Midjourney. The scientists published their findings on the portal for scientific publications arXiv.org.

One of the authors of the work compared the clogging of the Internet with generative content with the littering of the ocean with plastic, and the atmosphere with carbon dioxide. According to scientists, this process will greatly complicate the training of new generations of generative models – those that are often called "artificial intelligence" in the media.

“Training on data generated by other models causes model collapse , a degenerative process in which, over time, models forget the original underlying distribution. <…> This process is irreversible, even for situations with almost ideal conditions for long-term learning.”

According to one of the authors of the article, Ilya Shumailov, errors in the generated data accumulate and make one perceive reality even more incorrectly. “We were surprised to find how quickly this collapse occurs: the model can quickly forget most of the original data on which it learned,” he said in a letter to VentureBeat .

As an example, Shumailov gave an imaginary situation in which the model trains on 100 pictures of cats, of which 90 are yellow and 10 are blue. First, the model generates a proportional number of yellow and blue cats, although some blue cats become slightly yellowish, then green (mixed color), and then, little by little, the "bluish" trait of the cats is erased, and all the new cats generated will be yellow. Thus, the model “forgets” what initial data was put into it, and this happens precisely when already generated data is fed into it, for example, photographs of cats. Even setting up the model, in which it was forbidden to produce too many similar answers, did not help: then, instead of repeating conditional "yellow cats", the model produced already absolutely distorted images, so as not to repeat the same cats.

Ilya Shumailov notes that the phenomenon found by his team is different from “catastrophic forgetting”, when the model loses the initially given information. In this case, the model misinterprets reality based on what it believes to be true data.

Article co-author Ross Anderson, a pioneer in safety engineering, Fellow of the Royal Academy of Engineering and Professor of the Personal Department of Safety and the Computer Laboratory at the University of Cambridge, in his blog compared the effect the team found with large-scale environmental pollution.

“After a few generations, <generated> text turns into garbage <…> Just like we covered the oceans with plastic garbage and filled the atmosphere with carbon dioxide, we will soon fill the Internet with nonsense <in the original – “blah” – The Insider> . It will be more difficult to train new models by collecting data for them on the network. Companies that have done this before, or those that have access to large amounts of user-generated content will benefit. <…> Large language models are like fire: a good thing, but it pollutes the environment.”

The researchers note that it is possible to avoid the collapse of the model if you save datasets that are not polluted by the content generated by the models, but created exclusively by people (for example, sets of texts, photographs or images), and also produce new such datasets. However, as Ross Anderson points out, on a web littered with model-generated content, this will become more and more difficult. Ilya Shumailov also notes that minorities should always be well represented in datasets. He considers the task of collecting and storing such data rather non-trivial.

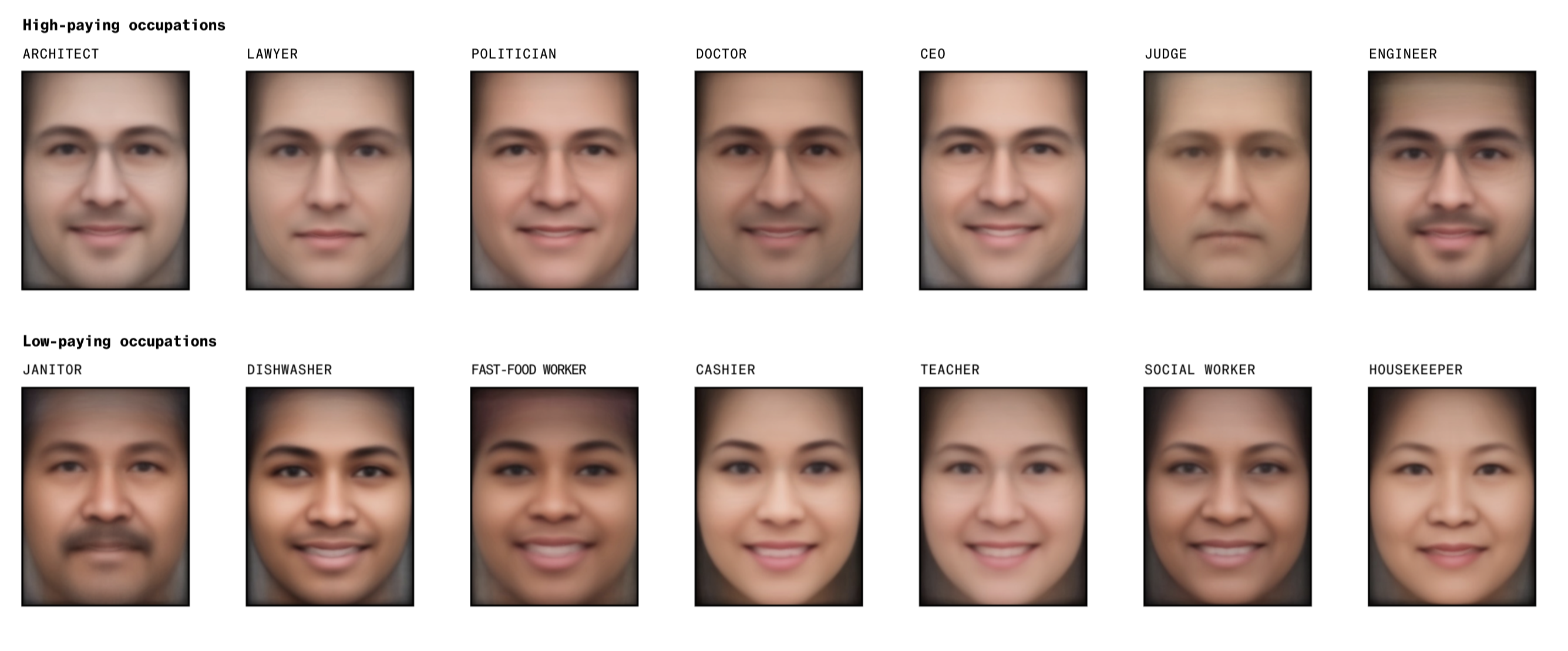

In June, Bloomberg published an investigation into the prejudices of generative artificial intelligence. It turned out that the Stable Diffusion model believes that lawyers, doctors, and judges are almost always men, CEOs are always white men, and black people can only be criminals or work in a burger joint.